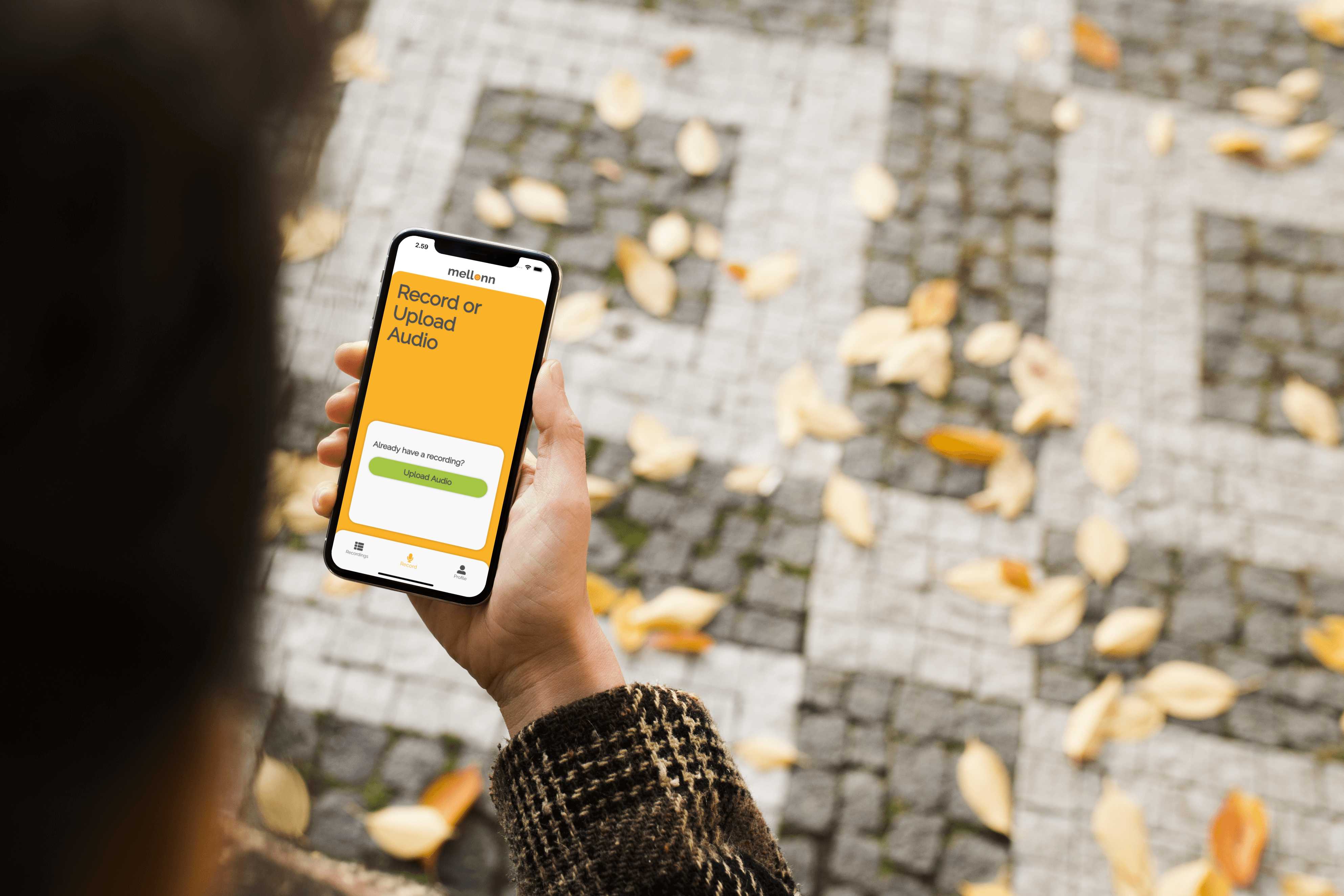

Photo Credit: Joakim Rosenfeldt

Joakim Rosenfeldt Pedersen

Updated January 21, 2022

My first blog post!

Exciting, right?!

There’s so much happening for me right now that I’ve decided to start documenting the project by writing a blog post every week from now on! In this first one, my only goal is to catch you up on what I’ve been doing for the last half(ish) year.

What is Mellonn?

Great question, glad you asked! Mellonn is my company, yes, mine. With Mellonn I’m envisioning a future in which everyone has easy access to some of the most advanced AI technology. To start with, Mellonn won’t be creating its own AI, but in the future I will definitely begin to explore those options. This means that right now I’m utilising AWS (Amazon) to deliver an absolutely excellent experience. But of course, this experience could be much better, and much more customisable, if Mellonn created its own AI.

What is this experience?

Can you read my mind? ’Cause you’re asking all the right questions! The experience I’m talking about is also my first project with Mellonn. To get an idea for a good experience, you first have to have a bad one. To explain the experience I’m creating, I’ll have to take you back to that bad experience, so let’s roll back the time!

It’s 2019, everything’s great, and I’m warming up to write the biggest assignment of my life… the SOP. For this assignment I chose to write about Proshop’s use of drones in their TV commercials. That isn’t really important for the story, but it’s a cool subject nonetheless. The important thing in this story is that I had to interview the marketing director at Proshop. This interview… it wasn’t scary at all. In fact, it was great! But what came after is a whole other story.

Having done an interview that was needed for an assignment meant that I had to transcribe the whole thing. That! That is what I call scary! It was only 20 minutes, but that thing took three hours of my time, and my sanity, to transcribe. Transcribing an interview is a thing I do not wish upon my absolute worst enemy, but it’s something we all probably have to deal with at some point in our lives. Do you see where this is going?

Half a year later, I get the absolutely genius idea to make an app that can do it for me when I eventually go to university. But Joakim, you said that you’ve worked on this for half a year, and this was back in 2019? Yeah, did I tell you I’m also a master procrastinator?

In the summer of 2021, I really begin to think about the whole experience I want to create, and most importantly what I do not want to experience. I get the idea for an app that can make this whole process of transcribing take much less time. The goal I’m going for is for it to take double the time of the interview, so a 20-minute interview would take about 40 minutes instead of three hours. This means you’ll have to listen to the interview twice, instead of a bijillion times. That number is scientific.

The project

Now that you have a quite decent idea of what Mellonn is and the idea behind it, I will try to catch you up on the project. Having worked on it for about half a year now, it would be a shame to say a lot hasn’t already happened. Let’s just take it from the start.

Choosing the framework

When you want to create an app, you’re met by a big and overwhelming choice: how do you want to create it? The first question: multi-platform or native?

Being the lazy person that I am, I quickly decided that I would use a multi-platform framework, since it’s easy and quick to develop for all the mainstream platforms in one go. But then the next question comes and slaps you in the face: what framework to use? To kill the suspense, I use Flutter. I chose Flutter over React Native because it’s exciting and new, and it looked much easier than React Native.

For me, as a more design-oriented type, Flutter just spoke to me differently, with its marketing focusing on beautiful and smooth design, and then native performance, while React Native focuses more on writing for multiple platforms at once. Just go to React Native’s website and then Flutter’s, and then tell me which one speaks most to you. I’ll help you: it’s Flutter’s.

React Native · Learn once, write anywhere

Flutter - Build apps for any screen

Beginning the UX design process

What can I say, I want to make my design teacher proud. So for once in my life I created a design before creating the thing. At first I procrastinated by going to Fiverr to get someone to design a logo for Mellonn. This was absolutely worth it.

With a logo, and therefore some colours to work with, I had no more excuses not to begin designing the UX. This of course meant that I now had to learn a new program from Adobe: XD. What an amazing piece of software! This thing can create rounded corners and shadows for life!

After an evening of tinkering with XD, I had something I could call a pretty good starting point for developing an app. And after that I could tone down the rounded corners and shadows a little again, because that went a little overboard.

Beginning of the development to now

It actually didn’t take me that long to recreate the design in Flutter. The rest of the time has been spent getting the backend to work well, as with every other thing in the universe. For the app’s backend, I chose to use what Amazon has created: AWS Amplify. An absolutely amazing piece of art, most of the time, when it works…

Amplify has definitely been the thing that has taken the most of my time. But why have I chosen Amplify instead of something like Firebase? The answer is quite simple: Amazon Transcribe. Amazon Transcribe supports one very important feature more widely than Google Cloud: speaker diarization, the act of recognising who says what, and the single most important thing for this app.
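To make "who says what" a bit more concrete, here is a minimal sketch of what an app can do with diarized output: merge consecutive words from the same speaker into turns. The input shape (a flat list of words with `speaker`, `start`, `end` and `text`) is a simplified assumption for illustration, not the exact JSON that Amazon Transcribe returns, and `Turn` and `group_into_turns` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str
    start: float
    end: float
    text: str


def group_into_turns(words):
    """Merge consecutive words from the same speaker into one turn."""
    turns = []
    for w in words:
        if turns and turns[-1].speaker == w["speaker"]:
            # Same speaker keeps talking: extend the current turn.
            last = turns[-1]
            last.end = w["end"]
            last.text += " " + w["text"]
        else:
            # Speaker changed (or first word): start a new turn.
            turns.append(Turn(w["speaker"], w["start"], w["end"], w["text"]))
    return turns


# Hypothetical, hand-written sample data (times in seconds).
words = [
    {"speaker": "spk_0", "start": 0.0, "end": 0.4, "text": "How"},
    {"speaker": "spk_0", "start": 0.4, "end": 0.9, "text": "are"},
    {"speaker": "spk_0", "start": 0.9, "end": 1.2, "text": "you?"},
    {"speaker": "spk_1", "start": 1.5, "end": 1.9, "text": "Great,"},
    {"speaker": "spk_1", "start": 1.9, "end": 2.3, "text": "thanks!"},
]

for turn in group_into_turns(words):
    print(f"{turn.speaker} [{turn.start:.1f}-{turn.end:.1f}]: {turn.text}")
```

Without speaker labels, all five words would end up in one undivided block of text, which is exactly the part that is painful to untangle by hand.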

Now how does this work? When a user opens the app, they are prompted to log in or create an account, which uses Amplify Auth. Then they are taken to the record page, which for now is just an upload page. Here they can upload an audio clip to be transcribed, give it a title and description, and then the most important thing: PAY UP.

Now that audio clip is uploaded to their private storage with Amplify Storage. The info about their recording is stored in Amplify DataStore. When the user goes to the recordings page, these DataStore elements are loaded and displayed.

While you can see this in the app, there are some things going on in the background. When an item (an audio file) is uploaded to Amplify Storage, a Lambda script gets triggered. This script takes that audio file and gets the info from DataStore to start a transcription job.
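As a rough sketch of that background step, and not the actual Mellonn script, a Python Lambda could read the bucket and key from the S3 upload event and start an Amazon Transcribe job with speaker labels turned on. The job naming scheme, the default language code and the default speaker count are assumptions made up for this example, and the DataStore lookup is left out entirely.

```python
from urllib.parse import unquote_plus


def build_transcription_request(event, max_speakers=2, language_code="en-US"):
    """Turn an S3 'object created' event into kwargs for start_transcription_job."""
    record = event["Records"][0]["s3"]
    bucket = record["bucket"]["name"]
    key = unquote_plus(record["object"]["key"])  # keys arrive URL-encoded in S3 events
    # Assumed naming scheme: job names may only use letters, digits, '.', '_' and '-'.
    job_name = "".join(
        c if (c.isascii() and c.isalnum()) or c in "._-" else "-" for c in key
    )
    return {
        "TranscriptionJobName": job_name,
        "Media": {"MediaFileUri": f"s3://{bucket}/{key}"},
        "LanguageCode": language_code,  # assumed default, pick the interview language
        "Settings": {
            "ShowSpeakerLabels": True,  # this is what enables speaker diarization
            "MaxSpeakerLabels": max_speakers,  # assumed default: interviewer + guest
        },
    }


def handler(event, context):
    """Lambda entry point, triggered when a file lands in the storage bucket."""
    import boto3  # imported here so the builder above can be tried without AWS

    request = build_transcription_request(event)
    boto3.client("transcribe").start_transcription_job(**request)
    return {"started": request["TranscriptionJobName"]}
```

Keeping the request building in its own function means that part can be checked locally with a hand-written event, without touching AWS at all.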

Once this job is done, another script gets triggered, which updates the status of the DataStore element. Now in the app, this recording is displayed as done, and the user can see the result. Seamless? Yes.

At least I wish it were. It isn’t quite ready yet, but it’s definitely getting there, so make sure to stay updated here!

Now that we have the AI out of the way, the next feature of the app is the editing of that transcript. Of course the result from the AI isn’t perfect, but it’s a great start! To make the worst part easier, I’m making an editing page where the user can fine-tune who talks when. And THIS, I’ve just gotten to work. It wasn’t an easy thing to do, but this is what can make or break the app, and I think it’s an awesome feature.
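One way to picture the "who talks when" edit, purely as an illustration and not how the app actually does it, is a function that hands every word inside a chosen time range to a different speaker. The word shape and the name `reassign_speaker` are hypothetical.

```python
def reassign_speaker(words, start, end, new_speaker):
    """Return a copy of words where those starting in [start, end) belong to new_speaker."""
    return [
        {**w, "speaker": new_speaker} if start <= w["start"] < end else dict(w)
        for w in words
    ]


# Hypothetical sample: the AI wrongly gave the answer "Yes." to the interviewer.
words = [
    {"speaker": "spk_0", "start": 0.0, "end": 0.5, "text": "Ready?"},
    {"speaker": "spk_0", "start": 0.8, "end": 1.1, "text": "Yes."},
    {"speaker": "spk_0", "start": 1.4, "end": 1.9, "text": "Great."},
]

fixed = reassign_speaker(words, start=0.8, end=1.4, new_speaker="spk_1")
for w in fixed:
    print(w["speaker"], w["text"])
```

The function returns new dictionaries instead of changing the originals, so the untouched AI result stays available if the user wants to undo an edit.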

The next step here is to create a way for the user to edit the text in the transcript. With these two features I’m sure that I will have the absolute best tool for quickly transcribing interviews. Other than that, I will of course always have to find ways of improving the usability of the editing tools and the app.

This is pretty much the point I’m at right now. But with me working full time on the project now and for the coming weeks, I’m making a lot of progress. And TODAY I’m officially beginning the closed testing of the app!

What happens next?

The most important question of all the important questions. Well, as stated at the start, I will try to create a blog post every week from now on, with updates on how things are going. We’ll see how long this lasts.

I will be working hard on getting the app ready for a full release within the next month or two. But this will depend heavily on whether I get an actual job or not in the meantime. If I don’t, then hurray for the app progress, but not that hurray for my bank balance.

Be sure to keep yourself updated here on the website. I will also post updates on both LinkedIn and Twitter, with more frequent updates on Twitter of course.

See y’all next week…